Forecasting the Growth of Generative AI Usage, Compute Requirements, and Infrastructure Costs

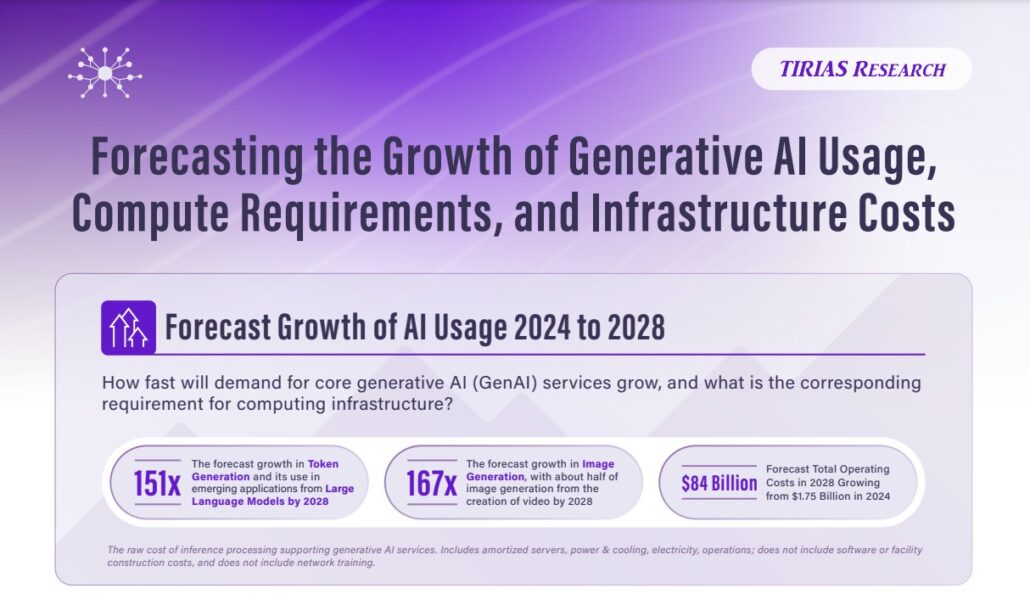

The Tirias Research GenAI FTCO Service provides insight into the demand, compute requirements, compute capacity, and TCO of generative AI inference. The forecast and TCO model draws on extensive knowledge of silicon performance and roadmaps, data center operational cost models, application benchmarks, and market research. This public forecast summary is intended to help the industry better understand the pace of GenAI growth and its potential impact on the technology industry and society. Service customers use the model to build scenarios at multiple layers to guide their product and corporate strategy. To arrange an overview, email Simon Solotko at simon@tiriasresearch.com, Jim McGregor at jim@tiriasresearch.com, or Francis Sideco at francis@tiriasresearch.com.

Lattice sensAI 4.1: Tools and IP Transform Low-Power FPGAs into Intelligent AI/ML Edge Computing Engines

The explosive growth of edge devices is driving the development of new applications that transform massive amounts of raw data into useful, actionable information for real-time decision making. Lattice’s sensAI 4.1 solution stack provides ready-to-use AI/ML tools, IP cores, hardware platforms, reference designs and demos, and custom design services to bring these edge devices and applications to market quickly. This white paper explores how the new Lattice sensAI 4.1 solution stack expands developers’ ability to use Lattice FPGAs for a variety of edge AI/ML applications.

Edge Impulse Accelerates MLOps With EON Tuner

Building machine learning (ML) into embedded devices is more than a one-time event. Integrating machine learning is a process that requires iterative improvement over time. Supporting a continuous cycle of training, deployment, feedback, and retraining requires machine learning operations (MLOps) to be an integral part of any toolchain. As a result, it is critical to follow MLOps best practices even when developing ML for low-power embedded devices. This white paper explores how Edge Impulse accelerates MLOps with its new “EON” capabilities.

Introducing Lossless Audio Streaming For Bluetooth and What it Means for Consumers

With streaming service providers like Tidal, Qobuz, Deezer, Amazon Music, and now Apple offering lossless audio, high-resolution audio is going mainstream. While audiophiles may have led the push for lossless, higher-quality audio, interest in better audio experiences has been growing on many fronts. Consider what advanced audio means for streaming movies, TV, gaming, virtual and augmented reality, or even video conferencing, especially now that remote work and learning have grown so dramatically. While that sounds exciting and transformative, there are important caveats to keep in mind and limitations to overcome to ensure you enjoy the full benefits of lossless and hi-res audio on wireless audio devices. Qualcomm is addressing these issues across the entire audio chain.

Using Machine Learning in Embedded Systems

Embedded processing systems surround us and go where we go. At home, they can be in the coffeemaker, washing machine, refrigerator, thermostat, television, and even your toothbrush. In a factory, you can find them in motors, automated machines, robots, and even the soda machine. In a vehicle, they control items such as the windows, air conditioning, radio/infotainment system, collision detection, and rear-view mirror. Most of these devices perform relatively simple functions and are not connected to the Internet. The future of these embedded devices, however, lies in becoming more intelligent and more connected. Connecting embedded devices lets us orchestrate multiple devices and make them more responsive to our needs. Yet these embedded systems are often constrained by cost, power, available memory, and/or compute performance. This paper explores how new software tools will allow any designer to add machine learning to any edge embedded system.

Interactive Applications Powered by Video at the Cloud Edge

Reducing the cost of delivering low latency is critical to emerging interactive edge services. Specialized video encoding ASICs deployed at the cloud edge, in regional points of presence, provide low-latency, high-density video encoding for application streaming. Together, encoding ASICs from NETINT and application servers at the cloud edge combine low latency with high density, making it economical to scale interactive application services at the cloud edge. This paper presents a TCO and environmental impact model for placing interactive streaming services at the cloud edge and compares software-on-CPU, GPU-based, and ASIC-based approaches.

The Emergence of Cloud Mobile Gaming

Cloud mobile gaming has a unique set of technical and economic challenges: the scale is massive, the network is cellular, and distributing mobile apps outside established app stores is virtually impossible. The existing codebase for mobile apps is built around the iOS and Android platforms, which encourages server-side implementations of the mobile technology stack. The infrastructure for mobile streaming uses Arm-based servers, virtualized multi-user graphics acceleration, and high-performance, low-latency video streaming. NETINT has developed video encoders that deliver the high visual fidelity and low latency that game streaming requires. The company’s dedicated video encoders and new Edgefusion combined storage and encoders promise to jumpstart mobile game streaming by delivering game streams with the highest density, low TCO, and excellent performance.

Smart Inference Devices: The Wave of Perceptive Electronics Powered by Machine Learning

The future of consumer device innovation lies in creating a new internet of smarter devices. This future will be powered by more perceptive sensors capable of local machine learning inference. Using these sensors and multiple inference networks concurrently will drive advances in virtually all aspects of smart device functionality and user experience. Running inference locally can also improve privacy, limiting the transmission of user data and sensor feeds to the cloud to only the most deliberate cases. Perceive Ergo is a new inference processor for small devices that delivers a 10X improvement in performance per watt over today’s world-class inference processors, with the potential to bring high-accuracy, data center-class inference to small, low-power, high-volume consumer devices.

Second Generation AMD EPYC Processor Enhanced Cache and Memory Architecture

Building on the success of the first generation, AMD has evolved its multi-die strategy, shifting to a different form of multi-die packaging in the second-generation EPYC server processor. This new approach, which AMD calls hybrid multi-die, allows AMD not only to divide a potentially large die into smaller interconnected dies but also to fabricate specific functions in the most appropriate process node for their cost and performance requirements. This agile hybrid multi-die architecture decouples the innovation paths of the CPU and cache complex from those of the I/O, letting AMD use the best process technology for the CPU cores while the I/O circuitry develops at its own pace.

Lattice CrossLink-NX: Embedded Vision Processing at the Edge

Lattice Semiconductor created the CrossLinkPlus family of specialized, small-footprint, low-power FPGAs to address the latest trends in video processing: mixing multiple sensors and displays, higher-resolution video, multiple interfaces, and edge AI processing. Many intelligent edge devices use image sensors for embedded vision applications, including AI/ML-driven functions such as object counting and presence detection. Supporting embedded vision at the edge, however, requires devices with specific design and performance characteristics: low power consumption, high performance, high reliability, and a small form factor. This white paper explores how the Lattice CrossLinkPlus family addresses those needs.

Arm and Siemens Partner to Change the Future of Automotive Semiconductor Design

Arm and Siemens are partnering to address the automotive revolution that will lead to autonomous vehicles. Integrating Arm’s vast IP library into the Siemens PAVE360 digital twin simulation platform will enable the design of optimized SoCs for future generations of vehicles while reducing development time for the entire vehicle. The platform could also lead to the development of custom IP for automotive and other applications, changing the way semiconductors, electronics, and all related platforms are designed in the future.

The Ampere eMAG Cloud Server Is The Better Arm Choice

There is a growing number of choices beyond Intel’s Xeon processors for cloud services, and choice is good. Numerous Arm server vendors are either entering or already established in the server market. In addition, Amazon has developed its own Arm-based server chip, called Graviton. The advantages of Arm-compatible cores are lower power per core, more cores per area, design flexibility to build custom versions, and an established software ecosystem. One leading Arm server company is Ampere Computing. Ampere is led by CEO Renee James, the former President of Intel, and draws on many years of engineering talent from former AMD, Applied Micro, Cavium, Intel, and Qualcomm engineers. In addition to this deep expertise, Ampere offers some unique advantages in cloud servers: more cores, larger memory support, and better bandwidth.

The 2nd Generation AMD EPYC Processor Redefines Data Center Economics

The last time AMD disrupted the data center was with the introduction of the 64-bit Opteron processor back in 2003. Then, in July 2017, AMD launched its EPYC processor, which began the next revolution in processor and data center design. The first-generation EPYC processor offered more cores, more bandwidth, and more I/O than competing Intel Xeon processors. This year, AMD is upping the ante once again with a second-generation EPYC processor, codenamed “Rome.”

AV Simulation Extends to Silicon

Technology advancements are leading to complex mechatronic systems capable of interacting with the physical world through advanced sensor technology and artificial intelligence (AI). These systems can perform functions ranging from basic tasks to fully autonomous operation, and they can perform them as well as or better than humans. However, designing, training, verifying, and validating these complex systems requires extensive simulation platforms and processing solutions that are pushing the limits of existing platforms. As a result, there is a need for closed-loop simulation platforms that provide the virtual environment needed not only to develop the next generation of mechatronic systems but also to develop the processing solutions that control them.

NVIDIA PLASTER Deep Learning Framework

“PLASTER” encompasses seven major challenges for delivering AI-based services:

- Programmability

- Latency

- Accuracy

- Size of Model

- Throughput

- Energy Efficiency

- Rate of Learning

This paper explores each of these AI challenges in the context of NVIDIA’s deep learning (DL) solutions.

AMD Optimizes EPYC Memory with NUMA

TIRIAS Research has published a new white paper, AMD Optimizes EPYC Memory with NUMA, which looks at how AMD designed the EPYC server chip to balance system cost, die area, memory bandwidth, and memory latency. AMD achieved that balance by using the efficiencies of multichip module (MCM) technology and the company’s new Infinity Fabric (IF) technology.

Dell EMC Accelerates Pace in Machine Learning

Dell EMC’s joint development and Volta launch agreement with NVIDIA has benefited both companies. Dell EMC gains early access to NVIDIA architectural improvements, while NVIDIA gains access to Dell EMC’s enterprise data center marketing acumen and reach.

The Instantaneous Cloud: Emerging Consumer Applications of 5G Wireless Networks

TIRIAS Research has published a new white paper, The Instantaneous Cloud: Emerging Consumer Applications of 5G Wireless Networks, sponsored by NGCodec. TIRIAS Research tracks the intersection of emerging technology and the invention of new applications. Emerging 5G networks will carry consumer context and input into the cloud and stream contextual or graphically intense experiences back down to users with low latency, nearly eliminating our ability to perceive lag in even the most sophisticated and immersive user experiences. New applications that take advantage of fast response and high sustained bandwidth can form an instantaneous cloud that delivers visually immersive experiences directly to consumers. Key technologies include cloud experience engines, graphics rendering, and video encoding. Architectures include cloud-edge computing with servers designed specifically for streaming high-performance graphics experiences, virtualized and available to mobile users from the cloud.